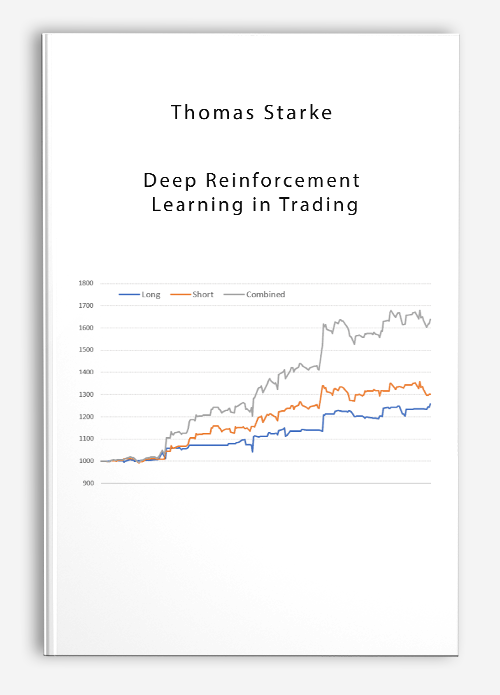

Thomas Starke – Deep Reinforcement Learning in Trading

$55.00

Prerequisites

Deep Reinforcement Learning in Trading requires a basic understanding of financial markets, such as the buying and selling of securities. To implement the strategies covered, you need a working knowledge of Keras, matplotlib, and pandas DataFrames. These skills are taught in the free Quantra courses ‘Python for Trading: Basic’ and ‘Introduction to Machine Learning for Trading’. To learn more about neural networks, the ‘Neural Networks in Trading’ course is recommended but optional.

About Thomas Starke

Dr. Thomas Starke is the Chief Executive Officer of the financial advisory firm AAAQuants. He has contributed to the development of high-frequency stat-arb strategies for index futures and of an AI-based sentiment strategy. He has worked with Boronia Capital, Vivienne Court Trading, and Rolls-Royce, and has served as a Senior Research Fellow and Lecturer at Oxford University. He is also a technology enthusiast with a strong interest in emerging fields such as artificial intelligence, quantum computing, and blockchain. He holds a doctorate in Physics from Nottingham University (UK).

Deep Reinforcement Learning in Trading with Thomas Starke

This course covers the applications and effectiveness of reinforcement learning (RL) models in trading. The deep RL model it presents draws on more than 100 research papers and articles. It was iterated many times on well-understood synthetic patterns to settle design choices such as experience replay, hyperparameters, Q-learning, and feedforward networks. By the end of the course, you will be able to build a complete RL framework from scratch, apply it in a practical capstone project, and implement the model in live trading.

List and explain what reinforcement learning needs in order to pass the delayed gratification test. Explain concepts such as states, actions, double Q-learning, policy, experience replay, and rewards. Examine the trade-off between exploitation and exploration. Build and backtest a reinforcement learning model. Assess returns and risk using a range of performance metrics. Apply these principles to real market data in a capstone project. Discuss the challenges of live trading and propose potential solutions. Run the RL model in both paper trading and live trading.
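As a rough illustration of two of the ideas listed above, and not taken from the course material, the sketch below shows how a discount factor determines whether an agent prefers a delayed reward, and how an epsilon-greedy rule balances exploration against exploitation. The function names, reward streams, and parameter values are hypothetical and chosen only for this example.

```python
import random


def discounted_return(rewards, gamma=0.99):
    """Sum a reward sequence, discounting each reward by gamma per step of delay."""
    total = 0.0
    # Work backwards so each earlier step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


# Hypothetical reward streams: take a small profit now, or hold for a larger one.
take_now = [1.0, 0.0, 0.0]
hold = [0.0, 0.0, 2.0]

for gamma in (0.9, 0.5):
    prefers_hold = discounted_return(hold, gamma) > discounted_return(take_now, gamma)
    print(f"gamma={gamma}: prefers delayed reward = {prefers_hold}")

# With epsilon=0 the agent always exploits the highest estimated value.
print(epsilon_greedy([0.1, 0.5, 0.2], epsilon=0.0))
```

With a discount factor close to 1 the delayed reward is worth more than the immediate one, while a low discount factor makes the agent favor the immediate payoff. This is one simple way to think about delayed gratification in an RL setting.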
